The traffic control features of iproute2 on Linux (CentOS 6) are not active by default, and are usually deployed to aid traffic flow over lower-bandwidth WAN links such as ADSL. In this article I describe how I used them on high-end systems with multiple gigabit interfaces combined into bonds (also known as LACP, LAG or port channel groups) with VLAN interfaces on top.

Why would a system with all that bandwidth need to control traffic? Elephants. Big data jumbo Hadoop HDFS elephants.

Hadoop nodes are frequently subjected to multiple parallel HDFS retrieval requests, which can peg the interfaces with outbound traffic for minutes at a time. The systems discussed here each have two gigabit interfaces in an active-active bond (mode 4, LACP) with a VLAN interface on top, all running 9KB jumbo frames. During one of these outbound bursts we would see ping loss and huge latencies (over 250ms was not uncommon); ssh login sessions might time out and, worse, job control connections could die. The switches and switch interconnects were not significantly loaded; the problems correlated exactly with 100% utilization of the outbound interface on the affected server.

Upgrading more than 250 nodes to 10G, along with the switches to match, would be neither quick nor cheap. Providing a “truck lane” for the HDFS data transfers eliminates these effects for free.

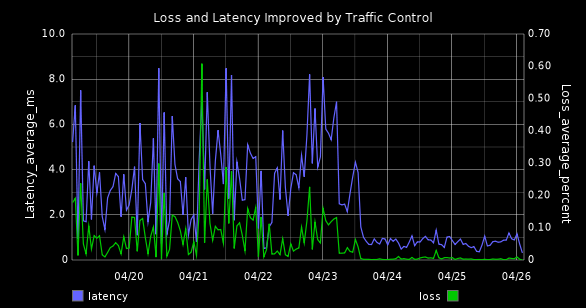

It worked. This graph shows the improvement in average packet loss and latency, measured by pings from each node in the cluster to an idle system on the same network. The benefit is even more visible on individual systems during their traffic bursts: what used to be horrible is now almost invisible. I think the small remaining drops and latency are due to sporadic congestion on the inbound path to the servers and in the inter-switch trunks, which do not (yet) have the benefit of this priority queuing.

Getting this to work was tricky and I tried various permutations before finding one that worked reliably.

One finding was that the “traffic shaping” qdiscs are not designed to work at high bandwidth. They make their adjustments once per jiffy (0.01s), which is nice and short for a modem or DSL connection, but far too much data passes in that interval on links like ours (ref).
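To put the jiffy in perspective, here is a rough calculation assuming the 10 ms tick quoted above, a single 1 Gbit/s link at line rate, and 9000-byte frames with framing overhead ignored:

$$
\frac{10^{9}\ \text{bit/s} \times 0.01\ \text{s}}{8\ \text{bit/byte}} = 1.25 \times 10^{6}\ \text{bytes},
\qquad
\frac{1.25 \times 10^{6}\ \text{bytes}}{9000\ \text{bytes/frame}} \approx 139\ \text{frames}
$$

A two-link bond doubles that to roughly 2.5 MB, or about 278 jumbo frames, per tick.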

This leaves us with the pure traffic prioritization qdiscs. Most of what I found online described pfifo_fast as the default qdisc, and it looked like just what I needed for a truck lane. Unfortunately it was not the default on our multiqueue-capable interfaces: there the default was the mq qdisc, for which I found little documentation or online reference.
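A quick way to see which root qdisc an interface really has is `tc qdisc show dev IFACE`. The small helper below (the name `root_qdisc_kind` is hypothetical) just pulls the root qdisc's kind out of that output. On a multiqueue NIC you would typically see `mq` at the root, with a `pfifo_fast` child per hardware transmit queue.

```shell
# Hypothetical helper: read the output of `tc qdisc show dev IFACE` on stdin
# and print the kind of the root qdisc (for example mq, pfifo_fast or prio).
root_qdisc_kind() {
    awk '$1 == "qdisc" {
        # Lines look like "qdisc <kind> <handle> root ..." or
        # "qdisc <kind> <handle> parent ...": report the one marked root.
        for (i = 3; i <= NF; i++)
            if ($i == "root") { print $2; exit }
    }'
}
```

Usage, with eth0 as an example name: `tc qdisc show dev eth0 | root_qdisc_kind`.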

After looking at the options, I chose the PRIO qdisc with 4 bands: band 4 as the truck lane, band 3 for default traffic, band 2 for higher priority and band 1 for essential traffic like ssh. PRIO serves band 1 first and only moves on to band 2 when band 1 is empty, and so on down the bands. Unlike shaping qdiscs, which allocate bandwidth to each class, PRIO will completely starve the lower-priority bands if the higher-priority bands fill the pipe, so it is essential to put the big flows in the lower-priority (higher-numbered) bands.
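A toy model of that strict-priority rule, written in plain POSIX shell. The function name `next_band` is hypothetical, and the model ignores new arrivals: it only reports which band PRIO would serve next, given how many packets are waiting in each band.

```shell
# Hypothetical helper: arguments are the number of packets queued in
# band 1, band 2, ... (band 1 = highest priority). Prints the band a
# strict-priority scheduler serves next, or 0 if every band is empty.
next_band() {
    _band=1
    for _depth in "$@"; do
        if [ "$_depth" -gt 0 ]; then
            # The first non-empty band wins; lower bands are not considered.
            echo "$_band"
            return 0
        fi
        _band=$((_band + 1))
    done
    echo 0
}
```

For example, `next_band 0 0 5 3` prints 3: band 4 gets nothing while even one packet is waiting in band 3, which is exactly the starvation described above.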

The trick in a bonded or VLAN configuration is to apply this qdisc to every interface involved. Outbound traffic passes through the VLAN interface, then the bond, and finally the physical interface, and each of these has its own qdisc. If any one is missed, the behavior reverts to a single FIFO at the interface lacking the PRIO qdisc.
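Since every layer needs the qdisc, it helps to list the layers rather than type them by hand. This sketch (hypothetical function `stack_ifaces`, assuming a single bond with a VLAN on top, as on these nodes) prints the VLAN interface, the bond and then the bond's slaves, which the Linux bonding driver exposes in `/sys/class/net/<bond>/bonding/slaves`. The `SYSFS` override is only there so it can be exercised without real interfaces.

```shell
# Hypothetical helper: print every interface a VLAN-on-bond stack sends
# through, top to bottom: the VLAN interface, the bond, then each slave.
# SYSFS defaults to /sys and may be overridden for testing.
stack_ifaces() {
    _vlan=$1
    _bond=$2
    _sysfs=${SYSFS:-/sys}
    echo "$_vlan"
    echo "$_bond"
    # The bonding driver lists its slaves space-separated in this file;
    # the unquoted expansion deliberately splits them into words.
    for _slave in $(cat "$_sysfs/class/net/$_bond/bonding/slaves"); do
        echo "$_slave"
    done
}
```

On a system where bond0 enslaves eth0 and eth1, `stack_ifaces bond0.100 bond0` should print `bond0.100`, `bond0`, `eth0` and `eth1` on separate lines (all names are examples).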

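```shell
# Run once for each interface ($i) in the stack: the VLAN interface,
# the bond and every physical slave.

# Remove any existing root qdisc (this may print an error if only the
# default qdisc is present, which is harmless here).
/sbin/tc qdisc del dev $i root

# 4-band PRIO root qdisc; the priomap is the pfifo_fast default plus one.
/sbin/tc qdisc add dev $i root handle 1: prio bands 4 priomap 2 3 3 3 2 3 1 1 2 2 2 2 2 2 2 2

# A 127-packet FIFO under each band: 1:1 is band 1 (highest priority),
# 1:4 is band 4 (the truck lane).
/sbin/tc qdisc add dev $i parent 1:1 handle 10: pfifo limit 127
/sbin/tc qdisc add dev $i parent 1:2 handle 20: pfifo limit 127
/sbin/tc qdisc add dev $i parent 1:3 handle 30: pfifo limit 127
/sbin/tc qdisc add dev $i parent 1:4 handle 40: pfifo limit 127
```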

I adapted the network init script to iterate through the interfaces and apply this at system startup. Get it here: trafficcontrol
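The modified init script itself is not reproduced here. What follows is only a hypothetical stand-in showing the same idea: loop over the interfaces and build the PRIO tree on each. The `apply_prio` and `run` names and the `DRY_RUN` and `TC` variables are my own inventions, not part of that script.

```shell
# Hypothetical sketch, not the actual init script: apply the 4-band PRIO
# tree to each interface named on the command line. With DRY_RUN=1 the tc
# commands are printed instead of executed.
TC=${TC:-/sbin/tc}
PRIOMAP="2 3 3 3 2 3 1 1 2 2 2 2 2 2 2 2"

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

apply_prio() {
    for _if in "$@"; do
        # Ignore the failure seen when only the default qdisc exists.
        run "$TC" qdisc del dev "$_if" root 2>/dev/null || true
        # $PRIOMAP is unquoted on purpose: tc expects 16 separate values.
        run "$TC" qdisc add dev "$_if" root handle 1: prio bands 4 priomap $PRIOMAP
        for _band in 1 2 3 4; do
            run "$TC" qdisc add dev "$_if" parent "1:$_band" handle "${_band}0:" pfifo limit 127
        done
    done
}
```

For example, `DRY_RUN=1 apply_prio bond0.100 bond0 eth0 eth1` (example names) previews the 24 commands for a two-slave bond with a VLAN on top; running it as root without `DRY_RUN` applies them.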

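The priomap maps Linux packet priorities to bands. The default priomap, 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1, matches the PRIO qdisc's default of 3 bands and is inherited from pfifo_fast. Band numbers in the priomap start at 0 (a value of 0 selects class 1:1), so as I have added an extra high-priority band, I incremented every value by one: default traffic (priority 0) now lands in class 1:3, my band 3, and nothing falls into band 1 unless it is explicitly classified there.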

The documentation on how the priomap is used had me scratching my head for a bit: at first reading I thought the numbers in the “Band” column were the priomap. They are not. If you treat the priomap as a zero-indexed lookup table and use the values in the “Linux” column as indices, you get the values in the “Band” column. As all this concerns mapping from the obsolete TOS value, I’m not sure it has much relevance. However, if any of the Linux priorities are in use, my incremented priomap should preserve backward compatibility.
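To make the lookup concrete, here is a simplified model of how, as I understand the kernel's PRIO code, a PRIO qdisc with handle `1:` picks a band when no tc filters are attached. If the packet's priority (skb->priority, which the iptables CLASSIFY target sets) carries the qdisc's own major number, its minor number selects the class directly; for an ordinary priority with no major number, the low four bits index the priomap. The function names are hypothetical and the band count of 4 is hard-coded to match this setup.

```shell
# Hypothetical helper: print entry number $1 (zero-indexed) of the
# priomap string $2. $2 is unquoted on purpose to normalise the spacing.
priomap_lookup() {
    echo $2 | cut -d' ' -f$(( $1 + 1 ))
}

# Simplified model (not kernel code) of band selection in a 4-band PRIO
# qdisc with handle 1:, ignoring tc filters. $1 is the packet priority as
# an integer (hex allowed), $2 the priomap. Prints the class as 1:N.
prio_class() {
    _prio=$(( $1 ))
    _map=$2
    _handle=$(( 0x10000 ))   # handle 1: is major 1, minor 0
    _bands=4
    _major=$(( _prio & 0xFFFF0000 ))
    if [ "$_major" -eq "$_handle" ]; then
        # Set via CLASSIFY --set-class 1:N: minor N picks band N-1 directly,
        # falling back to priomap entry 0 if N is out of range.
        _band=$(( (_prio & 0xFFFF) - 1 ))
        if [ "$_band" -lt 0 ] || [ "$_band" -ge "$_bands" ]; then
            _band=$(priomap_lookup 0 "$_map")
        fi
    elif [ "$_major" -ne 0 ]; then
        # Some other qdisc's classid: use priomap entry 0.
        _band=$(priomap_lookup 0 "$_map")
    else
        # Plain Linux priority: the low 4 bits index the priomap.
        _band=$(priomap_lookup $(( _prio & 15 )) "$_map")
    fi
    # Bands are zero-based, classes are 1:1 .. 1:4.
    echo "1:$(( _band + 1 ))"
}
```

With `MAP="2 3 3 3 2 3 1 1 2 2 2 2 2 2 2 2"`, `prio_class 0 "$MAP"` gives `1:3`, priority 6 (interactive) gives `1:2`, and `prio_class 0x10004 "$MAP"`, the value `CLASSIFY --set-class 0001:0004` produces, gives `1:4`. Feeding it the unincremented default map instead sends priority 6 to `1:1`.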

With the bands created and a FIFO attached to each, we can use the iptables mangle table to push default traffic to band 3 and HDFS traffic to band 4 (TCP port 50010 is the default HDFS DataNode data transfer port in Hadoop 1.x and 2.x). I chose to tag the traffic with DSCP values from the Cisco Enterprise QoS Solution Reference Network Design Guide, and then classify it into the bands based on those DSCP values.

```
*mangle
:PREROUTING ACCEPT
:INPUT ACCEPT
:FORWARD ACCEPT
:OUTPUT ACCEPT
:POSTROUTING ACCEPT
# Tag HDFS DataNode transfers (port 50010, either direction) as DSCP AF11
-A POSTROUTING -p tcp -m tcp --dport 50010 -j DSCP --set-dscp-class af11
-A POSTROUTING -p tcp -m tcp --sport 50010 -j DSCP --set-dscp-class af11
# Untagged TCP goes to class 1:3 (band 3), AF11 traffic to 1:4 (band 4)
-A POSTROUTING -p tcp -m dscp --dscp 0 -j CLASSIFY --set-class 0001:0003
-A POSTROUTING -p tcp -m dscp --dscp-class af11 -j CLASSIFY --set-class 0001:0004
COMMIT
```

This also tags the traffic on the network with the DSCP AF11 “bulk traffic” value, which will allow the network equipment to apply its own priority queuing as it forwards the traffic out of its (potentially pegged) ports toward the destination. That will be the topic of a future article.
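For reference, here is the AF11 value in numbers. In the Assured Forwarding scheme of RFC 2597, class AFxy has DSCP 8x + 2y, and the DSCP occupies the top six bits of the old IPv4 TOS byte. The helper names below are hypothetical.

```shell
# Hypothetical helper: decimal DSCP of Assured Forwarding class AFxy,
# x = class (1-4), y = drop precedence (1-3): DSCP = 8x + 2y.
af_dscp() {
    echo $(( 8 * $1 + 2 * $2 ))
}

# Hypothetical helper: the TOS byte carrying a given DSCP (DSCP << 2),
# with the two low ECN bits left at zero.
dscp_to_tos() {
    printf '0x%02x\n' $(( $1 << 2 ))
}
```

So `af_dscp 1 1` gives 10, meaning `--set-dscp-class af11` is equivalent to `--set-dscp 10`, and `dscp_to_tos 10` gives `0x28`, which is what a verbose tcpdump of the tagged HDFS traffic should show in its `tos` field.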